- publication

- SIGGRAPH 2022 (journal paper)

- authors

- Peizhuo Li, Kfir Aberman, Zihan Zhang, Rana Hanocka, Olga Sorkine-Hornung

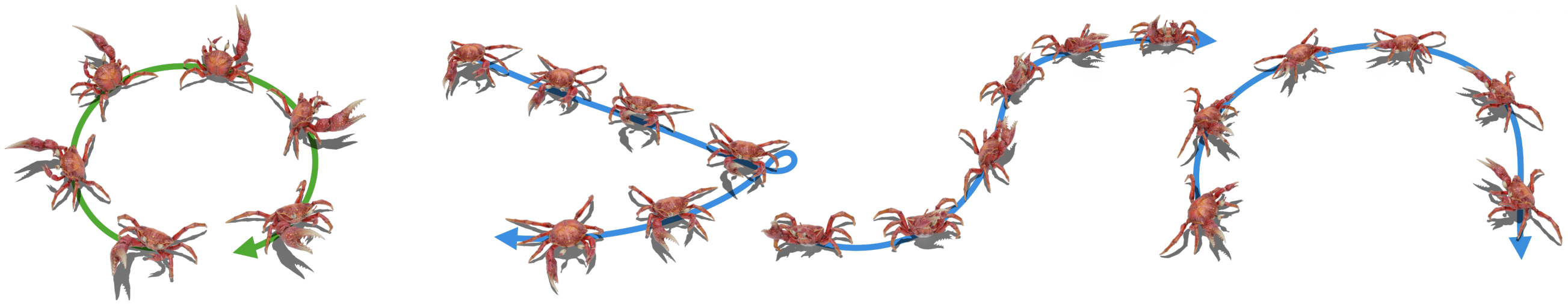

Given a single training motion sequence of a skeleton with arbitrary topology (green), our method learns to synthesize novel motion sequences (blue).

abstract

We present GANimator, a generative model that learns to synthesize novel motions from a single, short motion sequence. GANimator generates motions that resemble the core elements of the original motion, while simultaneously synthesizing novel and diverse movements. Existing data-driven techniques for motion synthesis require a large motion dataset that contains the desired, specific skeletal structure. By contrast, GANimator only requires training on a single motion sequence, enabling novel motion synthesis for a variety of skeletal structures, e.g., bipeds, quadrupeds, hexapods, and more. Our framework contains a series of generative and adversarial neural networks, each responsible for generating motions at a specific frame rate. The framework progressively learns to synthesize motion from random noise, enabling hierarchical control over the generated motion content across varying levels of detail. We show a number of applications, including crowd simulation, key-frame editing, style transfer, and interactive control, which all learn from a single input sequence. Code and data for this paper are at https://peizhuoli.github.io/ganimator.
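The coarse-to-fine idea described in the abstract can be illustrated with a small sketch. This is not the authors' implementation: `upsample`, `toy_generator`, and `synthesize` are hypothetical names, the "generator" is a stand-in that simply adds scaled Gaussian noise as a residual (a trained network would go there), and the frame counts and channel count are arbitrary assumptions. It only shows how a motion could be built level by level, with each level working at a higher temporal resolution than the previous one.

```python
import random


def upsample(motion, new_len):
    """Linearly resample a motion (list of frames, each a list of floats)."""
    old_len = len(motion)
    if old_len == 1:
        return [list(motion[0]) for _ in range(new_len)]
    out = []
    for i in range(new_len):
        # Map output frame i to a fractional position in the input sequence.
        t = i * (old_len - 1) / (new_len - 1) if new_len > 1 else 0.0
        lo = int(t)
        hi = min(lo + 1, old_len - 1)
        w = t - lo
        out.append([(1 - w) * a + w * b for a, b in zip(motion[lo], motion[hi])])
    return out


def toy_generator(coarse, noise, amplitude):
    """Hypothetical stand-in for a trained per-level generator.

    It adds scaled noise as a residual on top of the upsampled coarse motion.
    """
    return [
        [c + amplitude * n for c, n in zip(c_frame, n_frame)]
        for c_frame, n_frame in zip(coarse, noise)
    ]


def synthesize(frame_counts, channels, noise_scales, rng):
    """Build a motion coarse-to-fine, one temporal resolution per level."""
    motion = [[0.0] * channels for _ in range(frame_counts[0])]
    for length, scale in zip(frame_counts, noise_scales):
        motion = upsample(motion, length)
        noise = [[rng.gauss(0.0, 1.0) for _ in range(channels)] for _ in range(length)]
        motion = toy_generator(motion, noise, scale)
    return motion


# Noise injected only at the coarsest level: finer levels just refine timing.
rng = random.Random(0)
motion = synthesize([8, 16, 32], channels=6, noise_scales=[1.0, 0.0, 0.0], rng=rng)
print(len(motion), len(motion[0]))
```

In this toy setup, changing `noise_scales` decides which level contributes variation: noise at the first (coarsest) level alters the overall shape of the sequence, while noise at later levels only perturbs fine detail, which is the kind of per-level control the abstract refers to.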

acknowledgments

We thank Andreas Aristidou and Sigal Raab for their valuable input, which helped to improve this work. This work is supported in part by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (ERC Consolidator Grant, agreement No. 101003104, MYCLOTH).