- publication

- EUROGRAPHICS 2021

- authors

- Thomas Wolf, Victor Cornillère, Olga Sorkine-Hornung

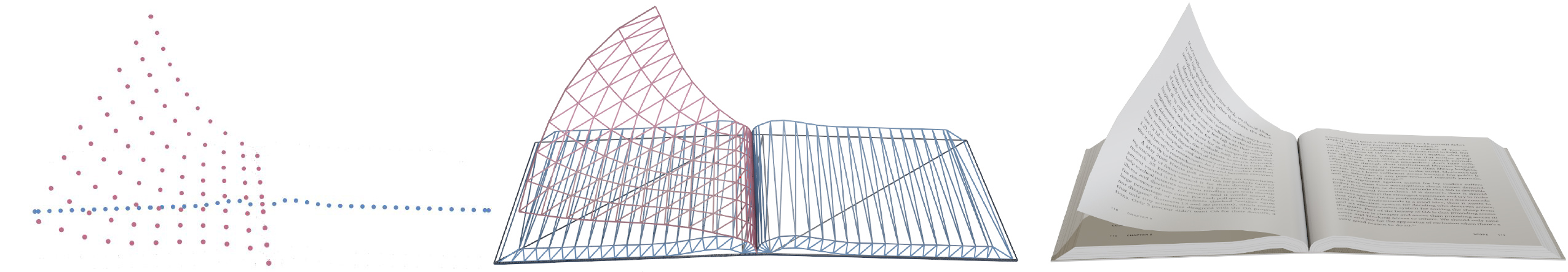

Our book simulation employs a discrete developable surface to animate paper pages. The system comprises a fully interactive page and page blocks representing the rest of the book. This separation enables a realistic, interactive simulation that runs in real time on devices with low compute power, such as mobile phones.

abstract

Reading books or articles digitally has become accessible and widespread thanks to the large number of affordable mobile devices and distribution platforms. However, little effort has been devoted to improving the digital book reading experience, despite studies showing disadvantages of digital text media consumption, such as diminished memory recall and enjoyment, compared to physical books. In addition, a vast number of physical, printed books of interest exist, many of them rare and not easily physically accessible, such as out-of-print art books, first editions, or historical tomes secured in museums. Digital replicas of such books are typically either purely text-based or consist of photographed pages, where much of the essence of leafing through and experiencing the actual artifact is lost. In this work, we devise a method to recreate the experience of reading and interacting with a physical book in a digital 3D environment. Leveraging recent work on static modeling of freeform developable surfaces, which exhibit paper-like properties, we design a method for dynamic physical simulation of such surfaces, accounting for gravity and handling collisions to simulate pages in a book. We propose a mix of 2D and 3D models, specifically tailored to represent books, to achieve a computationally fast simulation that runs in real time on mobile devices. Our system enables users to lift, bend and flip book pages by holding them at arbitrary locations and provides a holistic interactive experience of a virtual 3D book.

acknowledgments

We are grateful to Aude Delerue and Patrick Roppel for the inspiration for this project and the insightful discussions. We also thank Michael Rabinovich for helpful discussions.