- publication

- ACM Transactions on Graphics

- authors

- Oliver Glauser, Daniele Panozzo, Otmar Hilliges, Olga Sorkine-Hornung

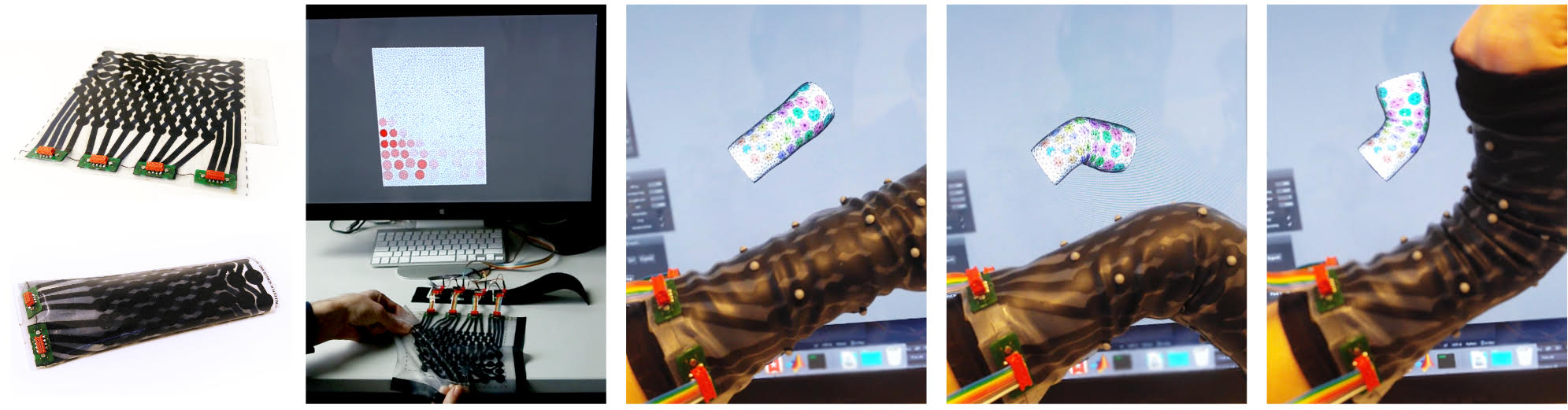

We propose a method for the fabrication of soft and stretchable silicone-based capacitive sensor arrays. The sensor provides dense stretch measurements that, together with a data-driven prior, allow for the capture of surface deformations in real time and without the need for line-of-sight.

abstract

We propose a hardware and software pipeline to fabricate flexible wearable sensors and use them to capture deformations without line of sight. Our first contribution is a low-cost fabrication pipeline to embed multiple aligned conductive layers with complex geometries into silicone compounds. Overlapping conductive areas from separate layers form local capacitors that measure dense area changes. Contrary to existing fabrication methods, the proposed technique only requires hardware that is readily available in modern fablabs. While area measurements alone are not enough to reconstruct the full 3D deformation of a surface, they become sufficient when paired with a data-driven prior. A novel semi-automatic tracking algorithm, based on an elastic surface geometry deformation, makes it possible to capture ground-truth data with an optical mocap system, even under heavy occlusions or partially unobservable markers. The resulting dataset is used to train a regressor based on deep neural networks, directly mapping the area readings to global positions of surface vertices. We demonstrate the flexibility and accuracy of the proposed hardware and software in a series of controlled experiments, and design a prototype of wearable wrist, elbow and biceps sensors, which do not require line-of-sight and can be worn below regular clothing.
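As a rough illustration of why a local capacitor can act as an area sensor, the sketch below uses an idealised parallel-plate model. It assumes an incompressible dielectric (area times thickness stays constant under stretch) and ignores fringe fields, so capacitance scales with the square of the overlap area. The function names and the numeric values are hypothetical and chosen only for illustration; this is not the authors' readout or calibration procedure.

```python
import math

EPSILON_0 = 8.8541878128e-12  # vacuum permittivity in F/m


def plate_capacitance(area_m2, thickness_m, rel_permittivity):
    """Ideal parallel-plate capacitance C = eps0 * eps_r * A / d."""
    return EPSILON_0 * rel_permittivity * area_m2 / thickness_m


def area_ratio_from_capacitance(c_now, c_rest):
    """Estimate the area ratio A/A0 from two capacitance readings.

    Assumption: the dielectric is incompressible, so A * d is constant.
    Then C is proportional to A**2 and A/A0 = sqrt(C/C0).
    """
    if c_now <= 0 or c_rest <= 0:
        raise ValueError("capacitances must be positive")
    return math.sqrt(c_now / c_rest)


# Hypothetical cell: 1 cm^2 overlap, 0.5 mm dielectric, eps_r = 3.
area_rest, thickness_rest, eps_r = 1e-4, 5e-4, 3.0
c_rest = plate_capacitance(area_rest, thickness_rest, eps_r)

# Stretch the overlap area by 20%; the layer thins by the same factor.
stretch = 1.2
c_now = plate_capacitance(area_rest * stretch, thickness_rest / stretch, eps_r)

estimated_ratio = area_ratio_from_capacitance(c_now, c_rest)
```

Under these assumptions, `estimated_ratio` recovers the simulated 20% area increase. Such a reading gives only a local area change, which is why the abstract pairs the measurements with a learned prior to recover full 3D deformation.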

downloads

- Paper (official version on dl.acm.org)

- Video

- BibTex entry

acknowledgments

We would like to thank Denis Butscher, Christine de St. Aubin, Raoul Hopf, Manuel Kaufmann, Roi Poranne, Samuel Rosset, Michael Rabinovich, Riccardo Roveri, Herbert Shea, Rafael Wampfler, Yifan Wang, Wilhelm Woigk, Shihao Wu and Ji Xiabon for their assistance with the fabrication and the experiments and for insightful discussions, and Seonwook Park, Velko Vechev and Katja Wolff for their help with the video. This work was supported in part by the SNF grant 200021_162958, the NSF CAREER award IIS-1652515, the NSF grant OAC:1835712, and a gift from Adobe.